There are very few productions today that don’t use some kind of VFX. It has become an everyday facet of TV and movie production. And, to the uninitiated, the plethora of jargon can be confusing. Something in particular that comes up often is 2D vs 3D VFX.

On the surface, it sounds like 2D is akin to cartoon animation and 3D is more Pixar-esque CGI. But this couldn’t be further from the truth. When we talk about 2D or 3D VFX solutions, we’re talking about techniques that can be accomplished in either a 2D or 3D space. Still confused? Let’s explore the topic further…

2D vs 3D VFX Solutions

In reality, this isn’t an issue of one versus the other. Both 2D and 3D solutions have their place in the VFX world. There will be some instances where all you need is to use some 2D techniques, such as a 2D matte painting, to add to the scene. Other times, you’ll need an entire 3D model for your production. And sometimes you’ll need to use both at once.

As you can probably guess, 2D effects work in a 2D space, which means there isn’t any “depth” or “dimension” to them. They’re useful when the camera moves only along the X and Y axes (left and right, up and down). 3D effects, on the other hand, add depth to the shot and are used when an element is likely to be front and centre and under more scrutiny. So 3D visual effects are more often used to create CGI characters or other objects (such as vehicles, planes, or spaceships) that are integral or important to the story being told.

2D

Let’s go into more detail about some of the more common 2D VFX solutions and techniques.

Matte painting

Matte paintings are one of the oldest techniques in the book. In the past, they used to be painted onto glass. Today, we can use digital painting software to create something that looks incredibly realistic. Often 2D matte paintings can be created using still images or elements from still images. However the effect is accomplished, the goal is the same: to create a convincing background for the shot.

Beauty and de-ageing

2D paint techniques can be used to remove blemishes, bags, and wrinkles on actors, and also to make them look younger or older. Increasingly, actors are being aged and de-aged to help tell stories that cover long time periods.

Crowd replication

2D compositing can be used to create crowds for indoor and outdoor scenes. By shooting plates with a relatively small number of extras, a relatively empty cinema, theatre, or football stadium can be made to look full.
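To give a feel for what compositing does under the hood, here is a minimal sketch of the standard "over" operation, which blends a foreground plate onto a background plate using a matte. It assumes tiny greyscale images stored as nested lists with values between 0 and 1; the function name `over` and the data layout are illustrative choices, not how any particular compositing package works.

```python
def over(foreground, background, matte):
    """Blend foreground over background, pixel by pixel.

    All three inputs are assumed to be same-sized nested lists of floats
    in the range 0..1. A matte value of 1 shows the foreground, 0 shows
    the background, and values in between mix the two.
    """
    return [
        [f * a + b * (1.0 - a) for f, b, a in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(foreground, background, matte)
    ]


# A one-row example: the extras plate replaces the left pixel only.
extras_plate = [[1.0, 1.0]]
empty_seats = [[0.0, 0.0]]
crowd_matte = [[1.0, 0.0]]
full_stadium = over(extras_plate, empty_seats, crowd_matte)
```

Repeating this with several plates of the same extras, each with its own matte, is roughly how a small group can be made to fill a venue.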

Muzzle flashes

These are a staple of many TV shows and films and are easily achieved using 2D visual effects. If suitable elements can be sourced, almost any kind of weapon or artillery can have a muzzle flash and smoke added.

Blood and gore

Many shows require blood or gore to be either added to or enhanced on actors’ faces and bodies. In most instances, with good elements, this can be achieved with 2D effects.

Tracking

Tracking in a 2D sense is about following objects on-screen on the X and Y axes. With 2D tracking, you’re likely to be following a number of points in the image, something which can be made easier by placing dots or tracking markers during filming.
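As a rough illustration of following a point on the X and Y axes, the sketch below searches a small neighbourhood in the next frame for the patch that best matches the area around a tracking marker in the previous frame. It is a simplified patch-matching approach under stated assumptions (tiny greyscale frames as nested lists, a marker that stays away from the frame edge); `track_point` and `make_frame` are hypothetical helper names, and production trackers are considerably more sophisticated.

```python
def make_frame(width, height, marker_x, marker_y):
    """Build a black test frame with a single bright tracking dot."""
    frame = [[0] * width for _ in range(height)]
    frame[marker_y][marker_x] = 255
    return frame


def track_point(prev_frame, next_frame, x, y, patch=1, search=2):
    """Return the (x, y) in next_frame that best matches the patch
    centred on (x, y) in prev_frame.

    Assumes the patch around (x, y) lies fully inside prev_frame.
    Matching uses the sum of squared pixel differences; lower is better.
    """
    height, width = len(next_frame), len(next_frame[0])
    best_cost, best_pos = None, (x, y)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            nx, ny = x + dx, y + dy
            # Skip candidates whose patch would fall off the frame.
            if not (patch <= nx < width - patch and patch <= ny < height - patch):
                continue
            cost = 0
            for py in range(-patch, patch + 1):
                for px in range(-patch, patch + 1):
                    diff = prev_frame[y + py][x + px] - next_frame[ny + py][nx + px]
                    cost += diff * diff
            if best_cost is None or cost < best_cost:
                best_cost, best_pos = cost, (nx, ny)
    return best_pos


# The marker moves two pixels right and one pixel down between frames.
frame_a = make_frame(8, 8, 3, 3)
frame_b = make_frame(8, 8, 5, 4)
new_position = track_point(frame_a, frame_b, 3, 3)
```

A high-contrast dot gives the search a single unambiguous best match, which is one reason physical tracking markers make the job easier.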

3D

3D VFX haven’t been around as long as some of the techniques used in 2D VFX, but anything that can be done in 2D can also be achieved in 3D. Here are some common 3D VFX solutions:

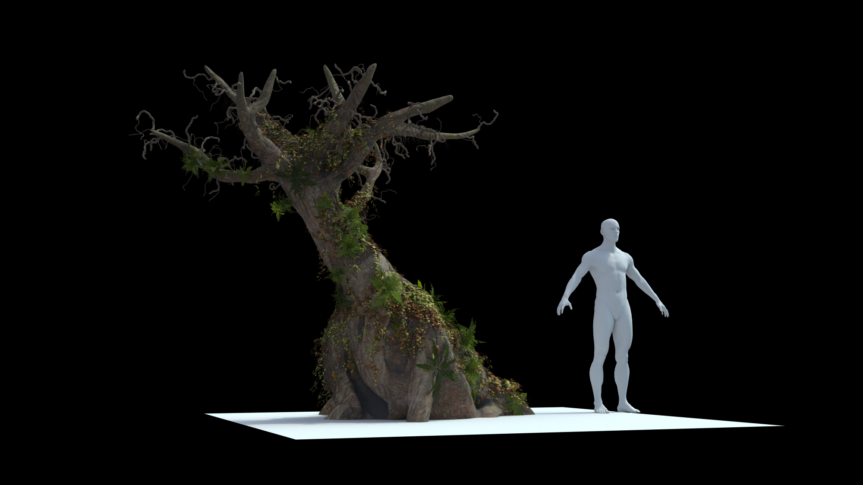

Assets

Your CG assets will most likely be 3D. They will need to blend into the scene so need to appear as if they are at home in a 3D space. In today’s world, even the most innocuous of items will be CG assets. It could be a giant spaceship, or it could be a futuristic gun. It could even be a cardboard box if you need it to be.

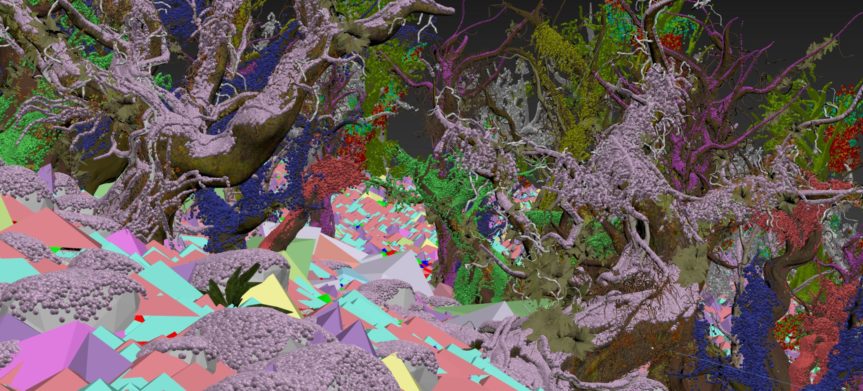

Match moving

This is another term for 3D tracking. It involves extracting the camera movements from the live-action shoot and replicating them in a CG environment. There was a time when this process was laboriously done by hand, but with advances in post-production software it is now much simpler. This is a necessary process if you want any 3D assets to move with the shot as if they were always there.
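To show why the solved camera matters, here is a minimal sketch of projecting a 3D asset position into the frame with a simple pinhole camera model. It assumes a camera that only translates and always looks down the +Z axis (rotation and lens distortion are left out), and the `project` function and its `focal_length` parameter are illustrative rather than taken from any match-moving tool.

```python
def project(point, camera_pos, focal_length=35.0):
    """Project a 3D world point onto the image plane of a pinhole camera.

    Assumes the camera looks straight down +Z with no rotation.
    Returns image-plane coordinates in the same units as focal_length.
    """
    x = point[0] - camera_pos[0]
    y = point[1] - camera_pos[1]
    z = point[2] - camera_pos[2]
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (focal_length * x / z, focal_length * y / z)


# A CG asset sits 10 units in front of the starting camera position.
asset = (0.0, 0.0, 10.0)
before = project(asset, (0.0, 0.0, 0.0), focal_length=50.0)
# The camera tracks 2 units to the right, so the asset slides left in frame.
after = project(asset, (2.0, 0.0, 0.0), focal_length=50.0)
```

If the CG camera did not follow the real one, the asset would stay pinned to the same spot in the frame while the rest of the shot moved around it.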

Particle effects

When you need to go even smaller, you can use particle effects. These can be added after filming to prevent them from being cumbersome for your actors. So if you have a fire in a scene, you can create realistic-looking smoke or embers. If it’s supposed to be a winter scene, add snow. Is it raining? You can create individual raindrops. Modern systems are capable of creating particle effects that appear and disappear over time, as they would if they were real.
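The idea of particles appearing and disappearing over time can be sketched with a very small simulation. The example below is a simplified illustration, not a description of any specific particle system: the `Particle` fields, the `step` function, and the constant-gravity motion are all assumptions made for the sake of the example.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float
    age: float = 0.0
    lifetime: float = 1.0  # seconds before the particle disappears


def step(particles, dt, gravity=-9.8):
    """Advance every particle by dt seconds and drop the expired ones."""
    survivors = []
    for p in particles:
        p.vy += gravity * dt
        p.x += p.vx * dt
        p.y += p.vy * dt
        p.age += dt
        if p.age < p.lifetime:
            survivors.append(p)
    return survivors


# One short-lived ember and one longer-lived ember.
embers = [
    Particle(0.0, 0.0, 1.0, 2.0, lifetime=0.5),
    Particle(0.0, 0.0, -1.0, 3.0, lifetime=2.0),
]
embers = step(embers, 0.25)  # both embers are still alive
embers = step(embers, 0.25)  # the short-lived ember has now expired
```

Snow, rain, and smoke follow the same pattern with different starting velocities, forces, and lifetimes.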

Lighting

This is used in tandem with your 3D assets. To make them look as if they are actually in a scene, the lighting and shadows need to match the environment around them. It also adds depth and helps cement the asset in the scene.

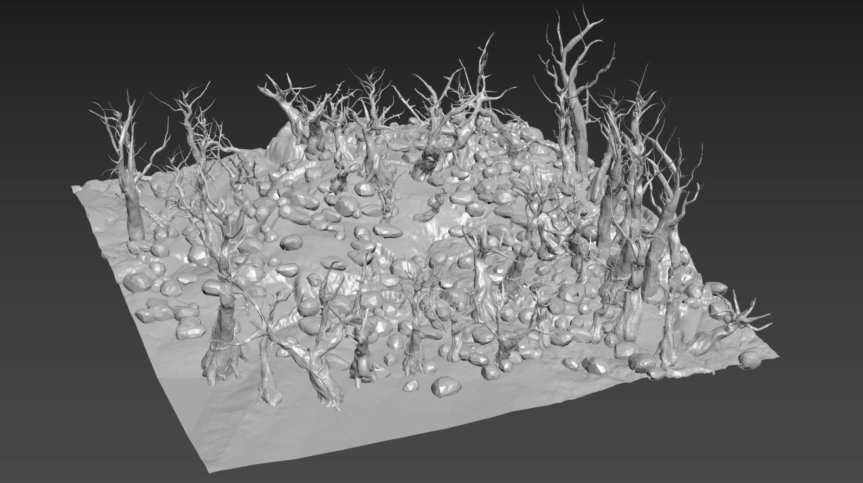

3D matte painting

You aren’t seeing things – matte painting is in both the 2D and 3D sections. A growing area in 3D VFX, 3D matte paintings are a way of creating the illusion of a 3D environment from a layered digital painting. In the past, artists would layer different panes of glass, but now we can use digital programs to create simple 3D geometry that matches the environment. It saves you the effort of creating incredibly detailed 3D models if the shot doesn’t call for that level of detail.

2D and 3D

All of these techniques are integral to post-production VFX. Different situations will call for a mixture of them all. Maybe you need a matte painting to show a war-torn London in the background while your 3D alien wreaks havoc in the foreground.

While most shows will require some 3D VFX, the majority still use 2D visual effects for their shots. The amount of 3D you have versus 2D will depend on your budget and the time available. If your project has lots of 3D elements – such as characters, assets, or even environments – you will need more time and budget to achieve them.

What solutions you need will depend entirely on your individual circumstances. Today, it is much cheaper and quicker to use VFX to solve any problems you have.

If you need a VFX company to support you during your production, look no further than REALTIME. You can see our impressive CGI work in the upcoming BBC adaptation of War of the Worlds. If you would like to know more about what we can do for you, or if you’d like advice on 2D vs 3D VFX, get in touch with me at [email protected].